Eedi Showing How AI Tutoring Can Deliver Personalized Learning Safely and Effectively

Creating A Responsive & Systematic Approach to Early EdTech Evidence: The LEVI Trialing Hub’s Matrix

Education technology applications are continually being invented, and recent AI innovations have only accelerated that pace. While there are widely accepted approaches to evaluating stable, late-stage products (e.g., Randomized Controlled Trials), there is much less clarity about how to conduct evaluations at earlier stages of development. Given the risks of AI-powered solutions, including hallucinations and concerns about fairness, early evaluations are more important than ever.

Five New ChatGPT-Based Tools from LEVI

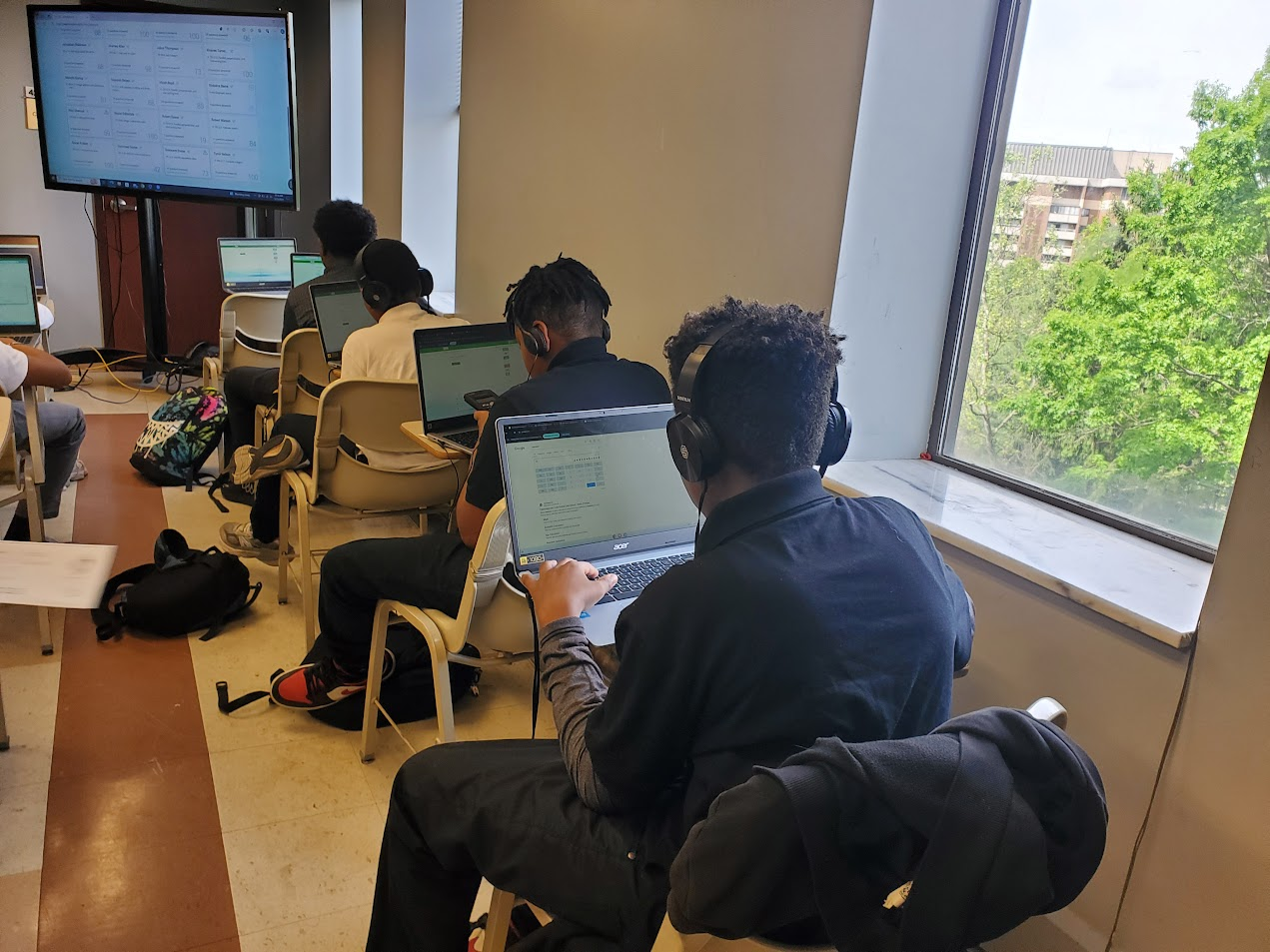

The Learning Engineering Virtual Institute (LEVI) created an AI Lab to help develop new ChatGPT-based tools. LEVI is supported by the Walton Family Foundation. Through extensive collaboration with educators, students, and parents, the AI Lab developed five new pilot tools that address critical issues in education.

CMU PLUS: Using Tutoring And AI To Overcome Math Anxiety

PLUS, part of the Learning Engineering Virtual Institute, is currently used in 13 schools in four states, reaching an estimated 2,800 students. Researchers have determined that students who use the platform improve their math skills and may even double their rate of math learning. Read about how one student also overcame his math anxiety by using PLUS.