Rising Academy’s Rori AI Chatbot: “It has built my confidence”

Last spring, Victor Appiah, a school leader at Rising Academy – Omega in Ghana, introduced Rising Academies’ ‘Rori’ AI-powered chatbot to his third grade students. For many students, this was their first time using a smartphone, and the excitement has been palpable. “They’re loving it,” […]

Minimizing And Mitigating Bias With The Fairness Hub

Because artificial intelligence and machine learning models are trained on data drawn from different contexts, they are susceptible to biases introduced through the selection and processing of datasets. It is critical to ensure that learned models do not introduce or amplify systematic biases against underrepresented or historically marginalized groups. This guide from the Fairness Hub serves as a starting point for more contextualized examinations of bias and fairness by providing (1) a simple metric for measuring bias, (2) an overview of bias mitigation strategies, (3) an overview of bias analysis and mitigation toolkits, and (4) a quick demonstration of how to measure and mitigate bias with the help of a toolkit.

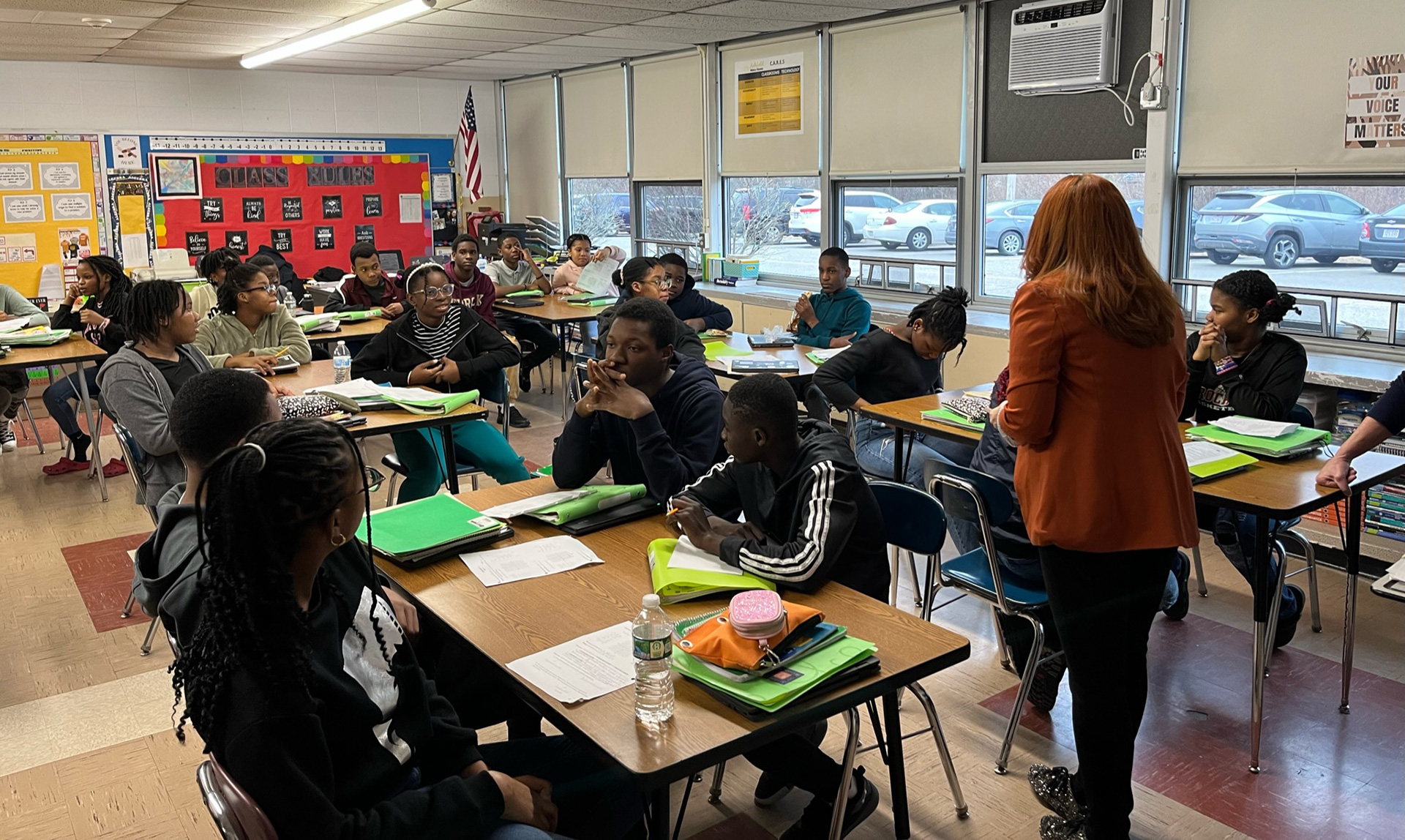

Carnegie Learning’s MATHstream: Merging the Hottest Trends in Tech to Engage Math Students

Born out of Carnegie Mellon University 25 years ago, Carnegie Learning has worked at the cutting edge of educational technology ever since, fine-tuning its products through years of data analytics and software improvements to help students learn mathematics.